- 9:15 – 10:15 a.m. Sparks of Artificial General Intelligence, Yin Tat Lee (Microsoft Research)

- 11 a.m. – 12 p.m. Possible Impossibilities and Impossible Possibilities, Yejin Choi (University of Washington)

- 1:30 – 2:30 p.m. Towards Reliable Use of Large Language Models: Better Detection, Consistency, and Instruction-Tuning, Christopher D. Manning (Stanford University)

- 3 – 4 p.m. An Observation on Generalization, Ilya Sutskever (OpenAI)

- 4 – 4:45 p.m. Panel Discussion (moderated by Alexei Efros)

- 9 – 10 a.m. Understanding the Origins and Taxonomy of Neural Scaling Laws, Yasaman Bahri (Google DeepMind)

- 10 – 11 a.m. Scaling Data-Constrained Language Models, Sasha Rush (Cornell University & Hugging Face)

- 11:30 a.m. – 12:30 p.m. A Theory for Emergence of Complex Skills in Language Models, Sanjeev Arora (Princeton University)

- 2 – 3 p.m. Interpretability via Symbolic Distillation, Miles Cranmer (Flatiron Institute)

- 3:30 – 4:30 p.m. Build an Ecosystem, Not a Monolith, Colin Raffel (University of North Carolina & Hugging Face)

- 4:30 – 5:30 p.m. How to Use Self-Play for Language Models to Improve at Solving Programming Puzzles, Adam Tauman Kalai (Microsoft)

- 9 – 10 a.m. Large Language Models Meet Copyright Law, Pamela Samuelson (UC Berkeley)

- 10 – 10:45 a.m. Panel Discussion (moderated by Shafi Goldwasser)

- 11:15 a.m. – 12:15 p.m. On Localization in Language Models, Yonatan Belinkov (Technion - Israel Institute of Technology)

- 2 – 3 p.m. Language Models as Statisticians, and as Adapted Organisms, Jacob Steinhardt (UC Berkeley)

- 3:30 – 4:30 p.m. Are Aligned Language Models “Adversarially Aligned”?, Nicholas Carlini (Google DeepMind)

- 4:30 – 5:30 p.m. Formalizing Explanations of Neural Network Behaviors, Paul Christiano (Alignment Research Center)

- 9 – 10 a.m. Meaning in the Age of Large Language Models, Steven Piantadosi (UC Berkeley)

- 10 – 11 a.m. Word Models to World Models, Josh Tenenbaum (MIT)

- 11:30 a.m. – 12:30 p.m. Beyond Language: Scaling up Robot Ontogeny, Jitendra Malik (UC Berkeley)

- 2 – 3 p.m. Are LLMs the Beginning or End of NLP?, Dan Klein (UC Berkeley)

- 3:30 – 4:30 p.m. Human-AI Interaction in the Age of Large Language Models, Diyi Yang (Stanford University)

- 4:30 – 5:30 p.m. Watermarking of Large Language Models, Scott Aaronson (UT Austin & OpenAI)

- 9 – 10 a.m. In-Context Learning: A Case Study of Simple Function Classes, Gregory Valiant (Stanford University)

- 10 – 11 a.m. Pretraining Task Diversity and the Emergence of Non-Bayesian In-Context Learning for Regression, Surya Ganguli (Stanford University)

- 11:30 a.m. – 12:30 p.m. A Data-Centric View on Reliable Generalization: From ImageNet to LAION-5B, Ludwig Schmidt (University of Washington)

- 2 – 3:30 p.m. Short Talks

- 4 – 5 p.m. Short Talks

Follow @NuitBlog or join the CompressiveSensing Reddit, the Facebook page, the Compressive Sensing group on LinkedIn or the Advanced Matrix Factorization group on LinkedIn

Other links:

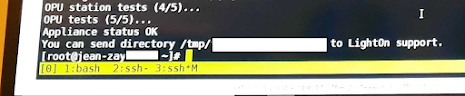

Paris Machine Learning: Meetup.com||@Archives||LinkedIn||Facebook|| @ParisMLGroup About LightOn: Newsletter ||@LightOnIO|| on LinkedIn || on CrunchBase || our Blog

About myself: LightOn || Google Scholar || LinkedIn || @IgorCarron || Homepage || ArXiv